Table of contents

- Introduction

- AI Services

- Data Security

- Prompt Engineering

Introduction

As I sit here on a somewhat cold British morning, with the country shimmying into autumn, I’ve just watched the Microsoft Wave 2 announcement, and I’m writing this blog post ready for this afternoon. It is clear that Microsoft is increasing its focus on Copilot and continuing its journey to integrate generative AI into the modern workday through the tools we interact with hour after hour. Yet I still think there is value in understanding how to make the most of Large Language Models as consumers.

If, like me, you regularly look through job adverts to see what the state of play is in our sector, then you might have come across the role of ‘Prompt Engineer’ arising in our humble capital of London. I’m not entirely sure that prompt engineering constitutes its own job role, but I do believe that practical prompt engineering, and understanding how to interact efficiently with language models, is swiftly becoming a soft skill worth having.

To make sure I have explained what ‘Prompt Engineering’ is adequately, let me say this: it is the practice of crafting precise and structured inputs to optimise the quality of outputs generated by AI models, such as those based on machine learning or natural language processing (NLP). As AI increasingly integrates into various industries, from content creation to customer service, prompt engineering is becoming a genuine soft skill for ensuring relevant, accurate, and valuable responses from AI systems.

With that in mind, this blog will go over some of the AI services out there, some of the data security considerations to weigh when using your organisation’s data within prompts, and then effective prompt engineering techniques and why they work.

AI Services

In the changing landscape of artificial intelligence, several significant providers offer robust AI services tailored to diverse business needs. Understanding the technical nuances of these services is crucial for selecting the right platform for your applications. Below is an overview of some leading AI service providers and their offerings:

OpenAI

Services Offered:

- GPT Models: Provides access to state-of-the-art language models like GPT-4, which can generate human-like text, perform translations, summarise content, and more. Don’t forget the recent launch of o1 (codenamed Strawberry), which works through its reasoning step by step before it responds.

- Codex: Specialises in programming tasks and assists in code generation and debugging.

- DALL·E: Enables the generation of images from textual descriptions, useful for creative and design applications.

Technical Features:

- API Access: RESTful APIs allow seamless integration with various applications and platforms.

- Fine-Tuning: Users can fine-tune models on proprietary datasets to tailor outputs to specific use cases.

- Scalability: Supports high-throughput applications with robust infrastructure to handle large volumes of requests.

- Language Support: Extensive support for multiple languages, enhancing global applicability.

Google Gemini

Services Offered:

- Gemini LLM: A versatile language model designed for various NLP tasks, including text generation, sentiment analysis, and language translation.

- Vertex AI Integration: Seamlessly integrates with Google’s Vertex AI for end-to-end machine learning workflows.

Technical Features:

- Tensor Processing Units (TPUs): Utilises TPUs for accelerated training and inference, offering high performance for demanding tasks.

- Custom Training Pipelines: Allows users to build and deploy custom training pipelines tailored to specific requirements.

- Data Integration: Deep integration with Google Cloud Storage and BigQuery for efficient data management and processing.

- Security Protocols: Implements advanced security measures, including encryption at rest and in transit and robust access controls.

Microsoft 365 Copilot

Services Offered:

- Copilot Integration: Embeds AI capabilities directly into Microsoft 365 applications like Word, Excel, and Outlook to enhance productivity through intelligent suggestions and automation.

- Power Platform Integration: Leverages the Microsoft Power Platform to build custom AI-driven applications and workflows.

Technical Features:

- Microsoft Graph API: Uses Microsoft Graph to access a vast array of organisational data securely and efficiently.

- Azure AI Services: Integrates with Azure Cognitive Services to provide advanced functionalities like computer vision, speech recognition, and language understanding.

- Compliance and Governance: Adheres to stringent compliance standards, ensuring that AI-driven processes meet regulatory requirements.

- Developer Tools: Provides SDKs and tools for developers to extend and customise AI capabilities within the Microsoft ecosystem.

Amazon Web Services (AWS) AI

Services Offered:

- Amazon SageMaker: A comprehensive service for building, training, and deploying machine learning models at scale.

- Amazon Comprehend: Offers natural language processing capabilities for text analysis and sentiment detection.

- Amazon Rekognition: Provides image and video analysis services for object detection, facial recognition, and content moderation.

Technical Features:

- Managed Infrastructure: Fully managed services that handle the underlying infrastructure, allowing developers to focus on model development.

- AutoML Capabilities: Enables automated model building and tuning, reducing the need for extensive machine learning expertise.

- Integration with AWS Ecosystem: Seamlessly integrates with other AWS services like Lambda, S3, and DynamoDB for comprehensive application development.

- Security and Compliance: Ensures data protection through encryption, access management, and compliance with standards like HIPAA and GDPR.

IBM Watson

Services Offered:

- Watson Assistant: Develops conversational agents and chatbots with advanced dialogue management.

- Watson Discovery: Facilitates data extraction and insights from large volumes of unstructured data.

- Watson Natural Language Understanding: Provides text analysis for entity recognition, sentiment analysis, and keyword extraction.

Technical Features:

- AI Model Customisation: Allows extensive customisation of AI models to fit specific business needs and domains.

- Hybrid Cloud Deployment: Supports deployment on-premises, on IBM Cloud, or in hybrid environments for greater flexibility.

- Robust API Suite: Offers a wide range of APIs for integrating AI functionalities into various applications and services.

- Explainability Tools: Provides tools to interpret and understand AI model decisions, enhancing transparency and trust.

Anthropic’s Claude

Services Offered:

- Claude Models: Designed to prioritise safety and interpretability, Claude offers advanced conversational AI capabilities for applications requiring nuanced understanding and ethical considerations.

- Claude for Enterprises: Tailored solutions for businesses needing secure and reliable AI integrations with enhanced control over model behaviours.

Technical Features:

- Constitutional AI Framework: This framework employs a unique approach to align AI behaviours with human values and ethical guidelines, reducing harmful outputs.

- API Access: Provides robust RESTful APIs for easy integration into existing systems and workflows.

- Fine-Tuning Capabilities: This feature allows organisations to fine-tune Claude models based on proprietary data to better suit specific industry needs.

- Scalability and Performance: Optimised for high throughput and low latency, ensuring efficient performance in real-time applications.

- Safety Controls: Includes advanced safety mechanisms to prevent misuse and ensure compliant AI interactions.

GROK

Services Offered:

- GROK AI Platform: A specialised platform focused on contextual understanding and real-time data processing, ideal for applications requiring immediate and accurate responses based on dynamic inputs.

- GROK Analytics: Provides deep insights and analytics capabilities, enabling businesses to leverage AI for data-driven decision-making.

Technical Features:

- Real-Time Processing: Capable of handling and analysing data streams in real time, making it suitable for applications like live customer support and instant data analysis.

- Contextual Understanding: Utilises advanced algorithms to maintain context over extended interactions, ensuring coherent and relevant responses.

- Integration Flexibility: Supports various integration methods, including APIs, SDKs, and webhooks, allowing seamless incorporation into diverse tech stacks.

- Customisable Workflows: This feature enables businesses to define and customise AI workflows to match specific operational requirements and use cases.

- Robust Security: Implements comprehensive security measures, including end-to-end encryption and secure authentication protocols, to protect sensitive data and ensure compliance.

Data Security

Data security is critical when integrating AI services into your organisation’s workflows. Handling sensitive information requires a comprehensive understanding of how different AI platforms manage data privacy, encryption, access controls, and compliance with regulatory standards. Below is a more in-depth look at data security considerations for leading AI service providers:

OpenAI Security

Data Usage and Privacy:

- Temporary Chats: OpenAI’s “Temporary Chats” feature ensures that conversation data is not used to further train its models, enhancing privacy and control over user data.

- Data Retention Policies: For enterprise customers, OpenAI offers customisable data retention policies through contractual agreements. These policies allow organisations to specify how long their data is stored and under what conditions it can be accessed.

Security Measures:

- Encryption: Data is encrypted at rest and in transit using industry-standard protocols like TLS 1.2+ and AES-256 encryption.

- Access Controls: Implements role-based access control (RBAC) to restrict data access to authorised personnel only.

- Compliance: Adheres to GDPR, CCPA, and HIPAA regulations, providing compliance certifications upon request.

- Audit Logs: Maintains detailed audit logs for monitoring access and changes to data, facilitating transparency and accountability.

Google Gemini Security

Data Usage and Privacy:

- Data Training Policies: Google Gemini does not guarantee that user conversations will not be used to train its language models unless explicitly contracted otherwise. Enterprises concerned about data privacy must negotiate specific terms to ensure data exclusivity.

- Data Anonymisation: Implements data anonymisation techniques to reduce the risk of personal data exposure during processing.

Security Measures:

- Encryption: Utilises encryption for data at rest and in transit, employing protocols such as TLS and AES-256.

- Identity and Access Management (IAM): Advanced IAM policies allow precise control over who can access data and services within the organisation.

- Compliance Certifications: Complies with various international standards, including ISO 27001, SOC 2, and GDPR, ensuring robust data protection frameworks.

- Vulnerability Management: Regular security assessments and penetration testing to identify and mitigate potential vulnerabilities.

Microsoft 365 Copilot Security

Data Usage and Privacy:

- Data Sovereignty: Ensures that prompts, responses, and any data accessed through Microsoft Graph are not used to train foundation LLMs, maintaining strict data sovereignty for enterprises.

- Data Isolation: Implements data isolation techniques to prevent cross-contamination between organisational data.

Security Measures:

- Advanced Threat Protection (ATP): Provides ATP to detect and mitigate malware, phishing, and ransomware threats.

- Multi-Factor Authentication (MFA): Enforces MFA to add an extra layer of security for accessing AI services and data.

- Compliance and Governance: Supports comprehensive compliance with global regulations, including GDPR, CCPA, and HIPAA, through built-in compliance tools and frameworks.

- Secure Multi-Tenant Architecture: This architecture ensures that each tenant’s data is securely isolated in a shared environment, preventing unauthorised access.

Azure OpenAI Security

Data Usage and Privacy:

- Sovereign Data Control: Azure OpenAI allows enterprises to deploy AI resources within their Azure tenant, ensuring that data remains within the organisation’s controlled environment.

- Custom Environments: Enterprises can build bespoke development environments, such as custom UI portals, to manage and utilise OpenAI’s capabilities without exposing data to external training processes.

Security Measures:

- End-to-End Encryption: This ensures data is encrypted during transmission and storage, using protocols like TLS and AES-256.

- Tenant Isolation: This feature implements strict tenant isolation to prevent data leakage between different organisational units or clients within the same Azure environment.

- Compliance Certifications: Azure OpenAI meets numerous compliance standards, including SOC 2, ISO 27001, and FedRAMP, assuring robust data protection practices.

- Custom Security Policies: Enterprises can define and enforce custom security policies, including data access controls, encryption standards, and monitoring protocols, tailored to their needs.

Amazon Web Services (AWS) AI Security

Data Usage and Privacy:

- Data Ownership: AWS maintains that customers retain full ownership of their data, which is not used to train AWS models unless customers explicitly opt in.

- Data Processing Agreements: Offers Data Processing Agreements (DPAs) that outline data handling practices and ensure compliance with regulatory requirements.

Security Measures:

- Comprehensive Encryption: Provides encryption for data at rest using AWS Key Management Service (KMS) and for data in transit using TLS.

- Fine-Grained Access Controls: Utilises IAM policies to grant least-privilege access to resources, minimising the risk of unauthorised data access.

- Continuous Monitoring: Implements continuous monitoring and logging through services like AWS CloudTrail and Amazon GuardDuty to detect and respond to security incidents in real-time.

- Compliance Programs: AWS AI services comply with a wide range of international and industry-specific standards, including GDPR, HIPAA, and PCI DSS.

IBM Watson Security

Data Usage and Privacy:

- Data Confidentiality: IBM Watson ensures that customer data is treated with the highest level of confidentiality and is not used for purposes beyond the agreed-upon services.

- Customisable Data Policies: Allows organisations to define their data handling and retention policies through contractual agreements.

Security Measures:

- Advanced Encryption Standards: Employs strong encryption protocols for data at rest and in transit, such as AES-256 and TLS 1.2+.

- Robust Access Management: Implements stringent access controls and identity verification processes to secure data access.

- Compliance and Auditing: Adheres to global compliance standards, including GDPR, HIPAA, and FedRAMP, and provides comprehensive auditing capabilities to track data access and modifications.

- Secure Development Lifecycle: Follows a secure development lifecycle (SDL) to ensure that security is integrated into every phase of product development, from design to deployment.

Anthropic’s Claude Security

Data Usage and Privacy:

- Ethical Data Handling: Claude is designed with a focus on ethical data usage, ensuring that user interactions are handled with the utmost privacy and not used beyond the scope of the service.

- No Training Data Inclusion: Anthropic guarantees that data processed by Claude is not utilised for further training or model improvement unless explicitly agreed upon in enterprise contracts.

- Data Minimisation: Implements data minimisation principles, collecting only the data necessary for service provision and ensuring its secure disposal after use.

Security Measures:

- Encryption: Employs robust encryption standards (TLS 1.2+ and AES-256) for data both at rest and in transit.

- Access Controls: Utilises granular access controls to ensure that only authorised personnel can access sensitive data.

- Compliance Adherence: Complies with major data protection regulations, including GDPR and CCPA, ensuring that data handling practices meet legal requirements.

- Audit Trails: Maintains comprehensive audit logs to monitor data access and modifications, enhancing transparency and accountability.

GROK Security

Data Usage and Privacy:

- Contextual Data Processing: GROK processes data in real-time, focusing on maintaining contextual relevance without storing personal or sensitive information beyond the necessary scope.

- Data Exclusivity for Enterprises: Offers options for enterprises to ensure that their data remains exclusive and is not used for any purpose beyond the immediate service delivery.

- Anonymisation Techniques: Implements advanced anonymisation techniques to protect personal data during processing and minimise privacy risks.

Security Measures:

- Real-Time Encryption: Ensures that all data transmitted and processed in real time is encrypted using industry-standard protocols like TLS and AES-256.

- Secure Authentication: Implements secure authentication mechanisms, including OAuth 2.0 and multi-factor authentication (MFA), to protect access to AI services.

- Role-Based Access Control (RBAC): Uses RBAC to manage permissions, ensuring that users have access only to the data and services necessary for their roles.

- Compliance and Standards: Adheres to international security standards and compliance requirements, such as ISO 27001 and GDPR, ensuring robust data protection frameworks.

- Continuous Security Monitoring: Employs continuous security monitoring and automated threat detection to identify and respond to potential security incidents promptly.

Data Security Rankings

Data security is critical when leveraging AI services, especially for enterprise users who want to use these capabilities to be more productive in the workplace. It encompasses data privacy, encryption, access control, and compliance with regulatory standards. Each AI service provider offers a unique set of security features and data handling policies. Below is a list of the providers and where I would place them in a ranking system that favours data sovereignty and ensures proprietary information is not used to train their public models.

- Azure OpenAI

Why It Ranks Highest:

- Maximum Control Over Data: Azure OpenAI allows enterprises to deploy AI resources within their own Azure tenant, ensuring complete data sovereignty.

- Sovereign Deployment Options: Organisations can maintain data within their regional boundaries, complying with local data residency requirements.

- Custom Security Policies: Enterprises can define and enforce bespoke security measures, tailoring data access and handling to their specific needs.

- Compliance Certifications: Meets numerous international standards (e.g., SOC 2, ISO 27001, FedRAMP), providing assurance of robust data protection practices.

- AWS AI

Why It Ranks Highly:

- Strict Data Ownership Policies: Customers retain full ownership of their data, and it is not used for training AWS models unless explicitly opted in.

- Comprehensive Encryption: Utilises AWS Key Management Service (KMS) for data at rest and TLS for data in transit.

- Continuous Monitoring: Services like AWS CloudTrail and Amazon GuardDuty provide real-time monitoring and threat detection.

- Compliance Programs: Adheres to a wide range of international and industry-specific standards, including GDPR, HIPAA, and PCI DSS.

- Microsoft 365 Copilot

Why It Ranks Well:

- Data Sovereignty: Ensures that all data accessed through Microsoft Graph is not used to train foundational LLMs, maintaining strict data sovereignty.

- Advanced Threat Protection (ATP): Protects against malware, phishing, and ransomware.

- Multi-Factor Authentication (MFA): Adds an extra layer of security for accessing AI services and data.

- Compliance and Governance: Comprehensive support for global regulations like GDPR, CCPA, and HIPAA through built-in tools.

- Anthropic’s Claude

Why It Ranks Favourably:

- Ethical Data Handling: Designed to ensure that user data is not used beyond service provision, aligning with ethical standards.

- Robust Encryption and Access Controls: Employs strong encryption (TLS 1.2+ and AES-256) and granular access controls to protect data.

- No Training Data Inclusion: Guarantees that data processed is not utilised for further training unless explicitly agreed upon.

- Data Minimisation: Collects only necessary data and ensures secure disposal after use.

- IBM Watson

Why It Ranks Respectably:

- Data Confidentiality: Ensures customer data is treated with the highest level of confidentiality and not used beyond agreed services.

- Customisable Data Policies: Allows organisations to define their own data handling and retention policies through contracts.

- Secure Development Lifecycle (SDL): Integrates security into every phase of product development.

- Compliance and Auditing: Adheres to global standards like GDPR, HIPAA, and FedRAMP, with comprehensive auditing capabilities.

- OpenAI

Why It Ranks Moderately:

- Temporary Chats: Ensures that conversation data is not used for further training of models, enhancing data privacy.

- Customisable Retention Policies: Offers enterprises the ability to specify data storage durations and access conditions through contracts.

- Robust Encryption: Uses industry-standard protocols (TLS 1.2+ and AES-256) for data protection.

- Compliance: Adheres to regulations such as GDPR, CCPA, and HIPAA, with available compliance certifications.

- Google Gemini

Why It Ranks Lower:

- Data Training Policies: Does not inherently guarantee that user conversations won’t be used for model training unless specific contractual agreements are made.

- Data Anonymisation: Implements techniques to reduce personal data exposure but relies on explicit agreements for data exclusivity.

- Strong Security Measures: Utilises encryption (TLS and AES-256) and advanced IAM policies, but the necessity for explicit agreements to prevent data training poses a risk.

- Compliance Certifications: Meets standards like ISO 27001, SOC 2, and GDPR, but data exclusivity requires additional negotiation.

- GROK

Why It Ranks Lowest:

- Real-Time Encrypted Data Processing: Ensures data is encrypted in real-time, protecting sensitive information during processing.

- Secure Authentication and Anonymisation: Implements secure authentication mechanisms and advanced anonymisation techniques.

- Data Exclusivity Options: Offers enterprises the ability to keep data exclusive, but as a newer or more specialised platform, it may not yet have the extensive compliance certifications and proven track record of longer-established providers.

- Compliance and Standards: Adheres to international security standards like ISO 27001 and GDPR, but continuous monitoring and real-time processing may introduce additional complexities.

Summary of Rankings

1. Azure OpenAI

2. AWS AI

3. Microsoft 365 Copilot

4. Anthropic’s Claude

5. IBM Watson

6. OpenAI

7. Google Gemini

8. GROK

Key Considerations for Data Governance and Security:

- Data Sovereignty: Ensuring that data remains within the user’s regional boundaries and complies with local regulations.

- Data Usage Policies: Guaranteeing that proprietary information is not used for model training or further development unless explicitly permitted.

- Encryption Standards: Utilising robust encryption protocols for data at rest and in transit.

- Access Controls: Implementing strict access management to ensure only authorised personnel can access sensitive data.

- Compliance Certifications: Adhering to international and industry-specific standards to meet legal and regulatory requirements.

- Customisation and Control: Allowing enterprises to define and enforce their own data handling and security policies.

When selecting an AI service provider, it is crucial to thoroughly assess these factors in the context of your organisation’s specific needs and regulatory obligations. Ensuring that the chosen platform aligns with your data protection requirements will safeguard sensitive information and maintain trust in your AI-driven initiatives.
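Whichever provider you choose, the data minimisation and anonymisation ideas mentioned above can also be applied on your side before a prompt ever leaves your environment. Below is a minimal sketch in Python, assuming you only care about email addresses and UK-style phone numbers. The `redact` helper and both patterns are my own illustrative assumptions, not a feature of any of the platforms listed, and real data would need far more thorough handling.

```python
import re

# Deliberately simple patterns for illustration; real identifiers vary widely.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\b0\d{4}\s?\d{6}\b")  # e.g. 07700 900123


def redact(text: str) -> str:
    """Replace email addresses and UK-style phone numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return PHONE_PATTERN.sub("[PHONE]", text)


raw_prompt = "Summarise the complaint from jane.doe@example.com (tel. 07700 900123)."
safe_prompt = redact(raw_prompt)
print(safe_prompt)
```

The point is the habit rather than the regular expressions: send the model only what it needs to do the job.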

Prompt Engineering

Prompt engineering involves designing and structuring input prompts to guide AI models toward producing high-quality, relevant, and contextual outputs. In practice, that means crafting instructions that direct the AI and improve the model’s ability to handle complex queries.

Definition:

Prompt engineering involves crafting well-structured inputs (prompts) to maximise the quality and relevance of AI-generated outputs. Users can guide AI systems to generate precise and contextually relevant responses through refined and detailed instructions.

Importance:

The effectiveness of AI largely depends on how clearly and precisely it is instructed through prompts. A well-designed prompt can enhance AI’s capability to understand and generate high-quality responses, while a poorly designed prompt can lead to irrelevant or incorrect outputs. Mastering prompt engineering is, therefore, crucial to improving AI interactions and achieving desired results.

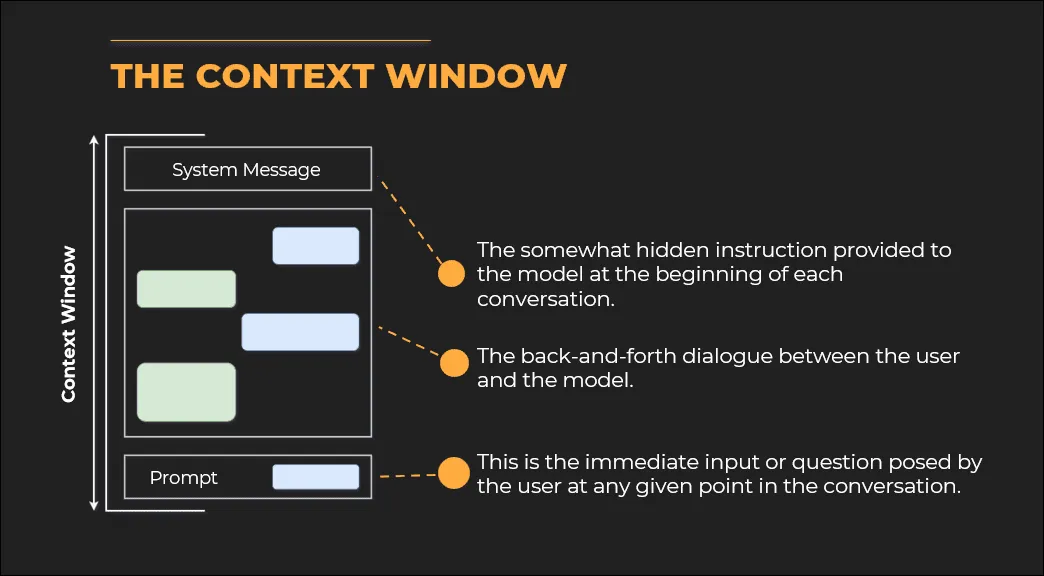

The Context Window

The concept of a context window in ChatGPT refers to the amount of information or text that the model can consider when generating a response. The context window is essentially a buffer of memory that includes all the input the model uses to produce its output in a given session. This input typically consists of three key components:

- System Message: This is a hidden instruction provided to the model at the beginning of each conversation. It helps set the tone, style, and guidelines for the model’s behaviour. The system message might include details like: “You are ChatGPT, a helpful assistant.” While users don’t see this message, it influences how the model responds, ensuring it aligns with its intended role.

- Conversation History: This includes the back-and-forth dialogue between the user and the model. Every time a user sends a prompt, both the user’s inputs and the model’s previous responses are stored in the context window. The model uses this historical information to maintain the flow of the conversation, remember facts from earlier, and ensure coherent responses. As the conversation grows longer, this history occupies more of the context window.

- User Prompt: This is the immediate input or question the user poses at any given point in the conversation. It is the direct request that the model responds to in that instance. The user prompt is a significant part of the context, as the model needs to address it in its response.
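The three components above map naturally onto the role-tagged message list that many chat-style interfaces use. Here is a minimal sketch in Python of how they might be assembled. The `build_context` helper and the sample messages are hypothetical, and nothing here calls a real provider’s API.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_context(system_message: str,
                  history: List[Message],
                  user_prompt: str) -> List[Message]:
    """Assemble the context window in the order the model reads it."""
    context = [{"role": "system", "content": system_message}]
    context.extend(history)  # earlier user/assistant turns, oldest first
    context.append({"role": "user", "content": user_prompt})
    return context


history = [
    {"role": "user", "content": "What is a context window?"},
    {"role": "assistant", "content": "It is the text the model can see at once."},
]
context = build_context("You are a helpful assistant.", history, "How big is it?")
for message in context:
    print(f"{message['role']:>9}: {message['content']}")
```

Everything in that list, not just the final question, counts towards what the model has to read on each turn.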

How the Context Window Works

The model doesn’t have unlimited memory. It can only consider a limited number of tokens (chunks of text that are often word fragments, rather than whole words or single characters) in its context window. For GPT-4, this token limit varies depending on the specific version used. For example, the standard GPT-4 model can process roughly 8,000 tokens, while some versions can handle roughly 32,000 tokens.

Truncation: Older messages are dropped from the context window when the conversation history plus the user prompt exceeds the token limit. This means the model might “forget” earlier parts of a long conversation.
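To make the truncation behaviour concrete, here is a rough sketch in Python. It assumes the simplest possible policy (drop the oldest messages first, always keep the system message and the latest prompt) and approximates tokens by counting whitespace-separated words. Real tokenisers and real products will behave differently, so treat `count_tokens` and `fit_to_window` as illustrative names only.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Crude stand-in for a tokeniser: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_window(system_message: str, history: List[str],
                  user_prompt: str, token_limit: int) -> List[str]:
    """Drop the oldest history messages until everything fits in the limit."""
    fixed = count_tokens(system_message) + count_tokens(user_prompt)
    kept = list(history)
    while kept and fixed + sum(count_tokens(m) for m in kept) > token_limit:
        kept.pop(0)  # the oldest message is "forgotten" first
    return kept


history = [
    "My name is Sam and I work in finance.",
    "Nice to meet you, Sam.",
    "Can you recommend a good book?",
    "Try a classic novel.",
]
kept = fit_to_window("You are a helpful assistant.", history,
                     "What is my name?", token_limit=25)
print(kept)
```

In this toy run the very first message, the one that introduced the user, is the one that falls out of the window, which is why a long chat can lose track of details you gave at the start.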

Impact of the Context Window

By considering past exchanges and instructions, the model can generate more contextually appropriate and personalised responses, making the interaction feel more natural.

Limitations: However, when the conversation grows too long and earlier parts are dropped from the window, the model may lose track of important information discussed earlier, leading to less coherent or relevant responses in extended interactions.

The context window is a dynamic memory buffer that includes the system message, conversation history, and user prompt. It allows the model to generate context-aware responses but is limited by its token capacity.

The Basics of Effective Prompt Engineering

Several foundational strategies guide prompt engineering. Users can enhance the performance of AI models by focusing on simplicity, specificity, and context.

Start Simple

When interacting with AI, begin with clear, concise prompts and iterate based on the results. Avoid overcomplicating the initial prompt; start with simple instructions and gradually add complexity as needed. This approach allows you to see how the AI responds and adjust accordingly.

Be Specific

Specificity is key to minimising irrelevant or generalised outputs. Include detailed instructions, such as the desired tone, format, and key points you want the AI to address. Being specific helps the AI generate the most helpful response.

Context Matters

Providing the AI with adequate context is essential for ensuring that its responses are relevant and accurate. The more context you provide, the better the AI will understand the situation or problem, allowing for more insightful responses.

Key Techniques to Improve Prompts

Refining prompts through specific techniques can significantly enhance the quality of AI outputs.

- Iterative Prompting - Refine responses by asking follow-up questions or requesting clarifications. This process allows for dynamic, continuous improvement of the prompt based on received outputs.

- Chain-of-Thought Prompting - Break complex queries into smaller, manageable parts to guide the AI step-by-step. This helps the AI approach multi-step problems more systematically.

- Question Refinement Pattern - Ask the AI to suggest better questions for unclear queries, improving the prompt’s precision. This meta-cognitive approach encourages the AI to assist in refining the prompt itself.
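As an illustration of the chain-of-thought idea in the list above, the sketch below wraps a question in numbered sub-steps for the model to work through. The `chain_of_thought_prompt` helper, the wording of the instruction, and the example question are all my own assumptions. There is no single canonical phrasing.

```python
from typing import List


def chain_of_thought_prompt(question: str, steps: List[str]) -> str:
    """Wrap a question with numbered sub-steps for the model to work through."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Question: {question}\n\n"
        "Work through the following steps in order, showing your reasoning "
        "for each before giving a final answer:\n"
        f"{numbered}\n\n"
        "Final answer:"
    )


cot_prompt = chain_of_thought_prompt(
    "Should we migrate our file shares to SharePoint Online?",
    [
        "List the current pain points with the file shares.",
        "Identify the migration risks.",
        "Weigh the benefits against the risks.",
    ],
)
print(cot_prompt)
```

If the first response misses something, you can feed it back with a follow-up question, which is the iterative prompting technique from the same list.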

Advanced Techniques

As users become more experienced, employing advanced prompt engineering techniques can lead to more precise outputs.

- Persona Setting - Define a specific role or perspective (e.g., “As an SEO expert…”) to tailor the AI’s responses. This technique allows the AI to respond from a specific point of view, making responses more contextually appropriate.

- Template Filling - Use placeholders to structure prompts for dynamic content creation. This technique is beneficial in automated emails or product descriptions. It provides flexibility, allowing for quick variations of similar content.

- Prompt Re-framing - Subtly rephrase prompts to explore nuances and get varied responses. Prompt re-framing encourages creativity and helps users view problems from multiple angles.
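Template filling from the list above is easy to try with nothing more than Python’s standard library. The sketch below uses `string.Template` placeholders. The template wording, the placeholder names, and the products are made up for illustration.

```python
from string import Template

# $-prefixed names are placeholders that get filled in per product.
product_prompt = Template(
    "As a $persona, write a $tone product description for $product. "
    "Keep it under $word_limit words."
)

products = ["a standing desk", "a mechanical keyboard"]
prompts = [
    product_prompt.substitute(
        persona="copywriter", tone="friendly", product=item, word_limit=80
    )
    for item in products
]
for filled in prompts:
    print(filled)
```

Because the structure stays fixed and only the placeholders change, it is easy to compare outputs across variations and see which wording of the template works best.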

Common Pitfalls and How to Avoid Them

Prompt engineering requires attention to detail. Here are some common pitfalls and strategies for avoiding them:

Vague Prompts

Lack of clarity leads to poor outputs. Be precise about tasks and expected outcomes. Define your expectations to guide the AI more effectively.

Lack of Examples

Without specific examples, AI responses can be generic. Include examples to guide the AI. Providing examples helps the AI understand the expected output style and structure.
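One common way to include examples is to place a few worked input and output pairs ahead of the real request (often called few-shot prompting). Here is a minimal sketch, assuming a simple `Input:` / `Output:` layout. The `few_shot_prompt` helper and the sample sentences are hypothetical.

```python
from typing import List, Tuple


def few_shot_prompt(instruction: str,
                    examples: List[Tuple[str, str]],
                    new_input: str) -> str:
    """Prepend worked input/output pairs so the model can mirror their style."""
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    parts.append(f"Input: {new_input}")
    parts.append("Output:")  # left open for the model to complete
    return "\n".join(parts)


few_shot = few_shot_prompt(
    "Rewrite each sentence in a formal tone.",
    [
        ("Can't make the meeting, sorry!",
         "I regret that I am unable to attend the meeting."),
        ("Thanks a bunch for the help.",
         "Thank you very much for your assistance."),
    ],
    "Gonna be late with the report.",
)
print(few_shot)
```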

Misaligned Persona

Ensure your persona matches the intended audience for more accurate, resonant responses. A mismatch can lead to irrelevant or off-tone results.

Effective Prompting Patterns

Certain patterns of prompting can be particularly effective in specific scenarios:

- Forecasting Pattern - Feed the AI raw data and ask it to make predictions. Ensure you give adequate background data.

- Cognitive Verifier Pattern - Ask the AI to list factors to consider before answering a complex query, ensuring thoughtful responses.

- Interactive Role-Playing - Engage in back-and-forth interactions, such as collaborative storytelling, for creative applications. This is particularly useful for creative writing, marketing, or simulations.

Improving Response Quality

Maximising the quality of responses requires refining the prompt with additional elements:

Task Definition

Always clearly define the task you want the AI to perform, including the expected response format (e.g., blog post, list, summary). A well-defined task ensures the AI understands the objective and produces a more appropriate result.

Tone and Style

Specify the desired tone and style of the output, such as formal, conversational, or technical. This ensures that the AI generates a response that aligns with your expectations and the needs of your audience.

Format Specifications

If you need the output in a specific format (e.g., bullet points, structured paragraphs, headings), include these instructions in your prompt. Precise format specifications can significantly improve the clarity and usability of the AI-generated response.

Case Study: Prompt Engineering in Action

To illustrate the effectiveness of prompt engineering, let’s explore a real-world scenario.

- Scenario: Generating a personalised email for a marketing campaign.

- Prompt: “Create an email to promote our new AI product.”

- Context: “Targeting tech enthusiasts familiar with AI.”

- Persona: “Tech-savvy marketer.”

- Format: “Email format with catchy subject, intro, body, and CTA.”

- Example: Highlight the product’s unique features and benefits (Slash Co).

The AI will generate personalised marketing emails focusing on the product’s strengths for the target audience.
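The components in this case study can be stitched into a single prompt with a few lines of Python. This is only a sketch: the `assemble_prompt` helper and the `Label: text` layout are my own assumptions, and the order of the components is a judgment call rather than a rule.

```python
from typing import Dict


def assemble_prompt(parts: Dict[str, str]) -> str:
    """Join labelled prompt components into one prompt, skipping empty ones."""
    return "\n".join(f"{label}: {text}" for label, text in parts.items() if text)


email_prompt = assemble_prompt({
    "Persona": "Tech-savvy marketer.",
    "Task": "Create an email to promote our new AI product.",
    "Context": "Targeting tech enthusiasts familiar with AI.",
    "Format": "Email format with catchy subject, intro, body, and CTA.",
    "Example": "Highlight the product's unique features and benefits.",
})
print(email_prompt)
```

Keeping each component separate makes it straightforward to change one element, say the persona, and see how the output shifts.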

Practical Tips for Continuous Improvement

Prompt engineering is an evolving skill. To ensure continuous improvement:

Experimentation

Prompt engineering is an iterative process. Regular experimentation and adjustment of prompts based on the AI’s responses can improve outcomes over time. You can fine-tune the process and achieve better results by experimenting with different prompt styles and techniques.

Model Updates

Where you can, use the latest AI models, as they typically draw on more recent training data and are generally better equipped to handle complex queries and generate higher-quality responses.

Collaborative Learning

AI can assist in refining its outputs. Using its feedback, you can progressively improve prompts, turning AI interactions into a collaborative learning process. Encourage the AI to self-improve by asking it to reflect on and refine its responses.