Why bother with local models?

Privacy, cost, latency and, let’s be honest, the sheer giddy thrill of hearing your laptop fans spin up like a small jet engine.

| Factor | Cloud LLM API | Local LLM |
|---|---|---|
| Unit cost | £0.002–£0.12 / 1K tokens (varies by model) | Electricity and a half-decent PSU |
| Latency | 100–400 ms | 5–20 ms (RAM permitting) |
| Data residency | Largely dependent on setup | Your own aluminium chassis |
| Fine-tuning | Often pay-walled | Full control, LoRA all the things |
| Scaling | Virtually infinite | Limited only by how loud your GPU fans get |

Prerequisites

- .NET 8 SDK (or newer)

- Git + LFS – most GGUF weights sit on Hugging Face

- CPU with AVX2 (good) or GPU with ≥ 6 GB VRAM (better)

- ~8 GB spare RAM for a 3-4 B model (double that for Llama 3 8B)

Tip: If your CPU dates from the Windows 7 era, treat this guide as a polite nudge to upgrade.
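Not sure which camp your machine falls into? Here is a minimal sketch, assuming a .NET 8 console app. `Avx2.IsSupported` lives in `System.Runtime.Intrinsics.X86`, and `GC.GetGCMemoryInfo()` reports the total memory the runtime can see (not what is currently free), so treat the output as a rough sanity check rather than a verdict.

```csharp
using System;
using System.Runtime.Intrinsics.X86;

// True only when the current CPU and runtime can execute AVX2 instructions.
Console.WriteLine($"AVX2 supported: {Avx2.IsSupported}");
Console.WriteLine($"AVX supported:  {Avx.IsSupported}");

// Total memory visible to the .NET runtime (physical RAM or a container limit).
// This is NOT free memory, so leave headroom for the OS and your browser tabs.
var totalGb = GC.GetGCMemoryInfo().TotalAvailableMemoryBytes / (1024.0 * 1024.0 * 1024.0);
Console.WriteLine($"Memory visible to .NET: {totalGb:F1} GB");
```

If AVX2 comes back `False`, the "Unsupported CPU instruction set" entry in the FAQ further down is the one to read.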

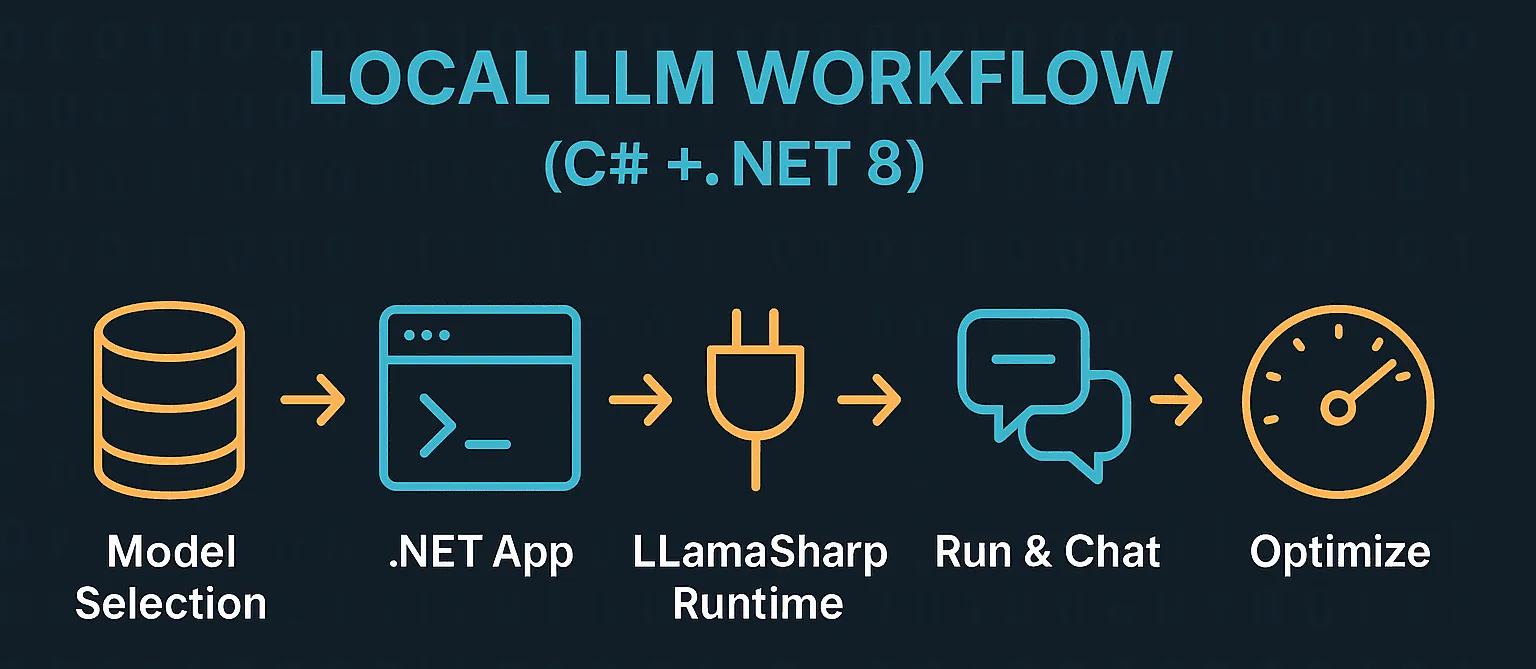

Step 1 – choose your model

| Model | Params | Disk (Q4_K_M) | What it’s good at |
|---|---|---|---|
| Phi-2 | 2.7 B | ~1.8 GB | Tiny, creative writing, micro-tasks |
| Mistral-7B-Instruct | 7 B | ~4.2 GB | Chat, summarisation |
| Llama 3 8B-Instruct | 8 B | ~4.5 GB | General purpose, fewer hallucinations |
| Code Llama 7B | 7 B | ~4.3 GB | Code completion / explanation |
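The disk column follows from simple arithmetic: parameters × bits per weight ÷ 8. The sketch below assumes an average of roughly 4.8 bits per weight for Q4_K_M. That figure is my working assumption rather than a spec value, and real files differ a little because some tensors are stored at higher precision. `EstimateGigabytes` is a hypothetical helper name.

```csharp
using System;

// Rough on-disk size in GB: billions of parameters x bits per weight / 8 bits per byte.
// The 4.8 bits/weight default is an assumed average for Q4_K_M, not an official number.
static double EstimateGigabytes(double billionsOfParams, double bitsPerWeight = 4.8)
    => billionsOfParams * bitsPerWeight / 8.0;

var models = new[]
{
    ("Phi-2", 2.7),
    ("Mistral-7B-Instruct", 7.0),
    ("Llama 3 8B-Instruct", 8.0),
};

foreach (var (name, size) in models)
    Console.WriteLine($"{name}: ~{EstimateGigabytes(size):F1} GB");
```

The estimates land in the same ballpark as the table, which is all you need when deciding whether a download will fit in RAM.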

Grab one with:

    git lfs install
    git clone https://huggingface.co/TheBloke/phi-2-GGUF

Copy a *.gguf file (the q4_k_m variant keeps RAM usage sane) into a Models folder.

Step 2 – bootstrap a .NET console app

    mkdir LocalLLM && cd LocalLLM
    dotnet new console --framework net8.0
    dotnet add package LLamaSharp --prerelease
    dotnet add package LLamaSharp.Backend.Cpu # or LLamaSharp.Backend.Cuda12 for NVIDIA GPUs

Drop your chosen *.gguf into ./Models.

Step 3 – wire in the runtime

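    using LLama;
    using LLama.Common;

    // Resolved against the working directory, which is the project folder under `dotnet run`.
    // Adjust the file name to match the *.gguf you actually downloaded.
    var modelPath = Path.Combine("Models", "phi-2-q4_k_m.gguf");

    var parameters = new ModelParams(modelPath)
    {
        ContextSize = 1024,
        Seed = 1337,        // newer LLamaSharp releases may expect the seed on the sampling pipeline instead
        GpuLayerCount = 0   // bump this if you added the CUDA backend
    };

    using var model = LLamaWeights.LoadFromFile(parameters);
    using var context = model.CreateContext(parameters);
    var executor = new InteractiveExecutor(context);

    // Cap the reply length so a chatty model cannot generate forever.
    var inferenceParams = new InferenceParams { MaxTokens = 256 };

    Console.Write("You : ");
    while (true)
    {
        var user = Console.ReadLine();
        if (string.IsNullOrWhiteSpace(user)) break;

        // InteractiveExecutor streams tokens via InferAsync (ChatAsync belongs to ChatSession).
        await foreach (var chunk in executor.InferAsync(user, inferenceParams))
            Console.Write(chunk);

        Console.Write("\n\nYou : ");
    }

First-run tax: the first launch is noticeably slower while the weights are read from disk and the native backend initialises; later launches are much quicker once the OS has the file cached.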

Step 4 – run, chat, repeat

    dotnet run

    You : Why do programmers prefer dark mode?
    LLM : Because light attracts bugs!

Yes, I know it’s one of the oldest jokes out there. I don’t apologise.

Performance tips

| Quick win | Impact |
|---|---|
| Quantise down (Q4_K_M) | 50 % less RAM, ≈ 95 % quality |
| Off-load N layers to GPU | 2–4× tokens/sec |
| Increase batch size | Better throughput on long prompts |
| n_ctx ≤ 1 k | Keeps VRAM sane on 6–8 GB GPUs |
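Why does context size matter so much? The KV cache grows linearly with it: 2 (keys and values) × layers × KV heads × head dimension × context length × bytes per element. A minimal sketch, assuming Llama 3 8B’s published shape (32 layers, 8 KV heads, head dimension 128) and a 16-bit cache. `KvCacheBytes` is a hypothetical helper, and actual usage depends on the backend and cache type.

```csharp
using System;

// KV cache size in bytes: keys + values for every layer, KV head and context position.
// bytesPerElement = 2 assumes a 16-bit cache, which is an assumption, not a guarantee.
static long KvCacheBytes(int layers, int kvHeads, int headDim, int contextSize, int bytesPerElement = 2)
    => 2L * layers * kvHeads * headDim * contextSize * bytesPerElement;

foreach (var ctx in new[] { 1024, 4096, 8192 })
{
    // Architecture numbers assumed from the published Llama 3 8B configuration.
    var mib = KvCacheBytes(layers: 32, kvHeads: 8, headDim: 128, contextSize: ctx) / (1024.0 * 1024.0);
    Console.WriteLine($"n_ctx={ctx}: ~{mib:F0} MiB of KV cache");
}
```

Under those assumptions a 1 k context costs about 128 MiB on top of the weights, while 8 k costs about 1 GiB, which is real money on a 6 GB card.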

Troubleshooting & FAQ

Unsupported CPU instruction set

Your processor is pre-AVX2. Compile llama.cpp with -march=native, or, you know, embrace that new-hardware smell.

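Memory usage explodes halfway through a prompt

Lower ContextSize, or grab a smaller model file such as q4_0 or q3_k_m (q5_0 is larger than q4_k_m, so it only helps if you started from something bigger). RAM is a harsh mistress.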

Can I still use ML.NET + ONNX?

Sure: BERT-style encoders run great in ONNX. For chatty generative use-cases, GGUF + LLamaSharp is simply more ergonomic in 2025.

Next steps

- Wrap in ASP.NET – expose a /v1/chat/completions endpoint.

- Add function calling – LlamaSharp 0.12 ships native function-tool mapping.

- Fine-tune – LoRA adapters + mlf-core make weekend model-training a thing.

- Desktop ship – dotnet publish -r win-x64 -p:PublishSingleFile=true and hand QA an EXE.
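For the first item, here is a rough sketch of what such an endpoint could look like as an ASP.NET Core minimal API, assuming a project created with `dotnet new web`. The `ChatRequest` and `ChatMessage` records and the `Generate` placeholder are hypothetical names of mine, and the response mirrors only a small slice of the OpenAI-style shape. Swap `Generate` for a call into your own LLamaSharp executor.

```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Http;

var builder = WebApplication.CreateBuilder(args);
var app = builder.Build();

// Hypothetical placeholder: replace the body with a call into your LLamaSharp executor.
static string Generate(string prompt) => $"(model reply to: {prompt})";

app.MapPost("/v1/chat/completions", (ChatRequest request) =>
{
    // Use the latest message as the prompt; a real endpoint would format the whole history.
    var prompt = request.Messages?.LastOrDefault()?.Content ?? string.Empty;
    var reply = new ChatMessage("assistant", Generate(prompt));

    // Anonymous object serialised as JSON; only a subset of the usual fields is included.
    return Results.Ok(new
    {
        model = request.Model,
        choices = new[] { new { index = 0, message = reply, finish_reason = "stop" } }
    });
});

app.Run();

// Request and response shapes (hypothetical, trimmed to the essentials).
record ChatMessage(string Role, string Content);
record ChatRequest(string Model, List<ChatMessage> Messages);
```

POST a JSON body with a `model` string and a `messages` array of `role`/`content` pairs, and you get a single assistant choice back. Streaming, auth and concurrency limits are left out on purpose.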

Wrap-up

Local LLMs have graduated from science-project status to genuine productivity boosters. With C#, the .NET SDK, and a few NuGet packages you can spin up a private, zero-cost chatbot before your CI pipeline finishes compiling main. (That’s a developer joke, I promise.)

Ping me on whatever social network is still cool by the time you read this and show me what you build!